Will ChatGPT take our jobs? The truth is that it gets an awful lot right and it gets an awful lot wrong!

Communications professionals, writers, journalists and researchers all seem to be asking whether ChatGPT and other new AI platforms will take their jobs. I’ve been using AI writing apps for a couple of years now to help overcome writer’s block, sketch out ideas and, well, just for fun! So, what do I think? Will AI take my job? Here’s a little analysis that I did in January 2023.

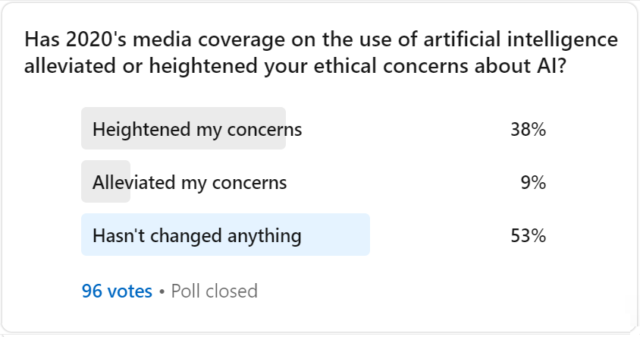

A recent survey of UAE residents by communications consultancy duke+mir and research firm YouGov found that 55% were concerned about losing their job to AI (rising to 66% in the under-25 age group). It’s a notion that tech firms and some governments have done their best to deride over the last five years, but the evidence is very clear: artificial intelligence allows companies to do more with less, and that means fewer people too.

It is true that new technologies, including AI, are also creating new categories of jobs, but that’s not much consolation if you don’t have, or can’t acquire, the new skills required to take one of those jobs. That said, there are many things that AI simply cannot do, because those jobs require human thinking, dexterity or other nuances that are particular to human beings.

However, for some, the arguments are a little too academic. Most people don’t know much about how AI works, how it’s used, or what the benefits and risks might be. Many have relied on tech firms, scientists, journalists and government spokespeople for insights into how AI technologies will affect them. Now, suddenly, this may have changed a little.

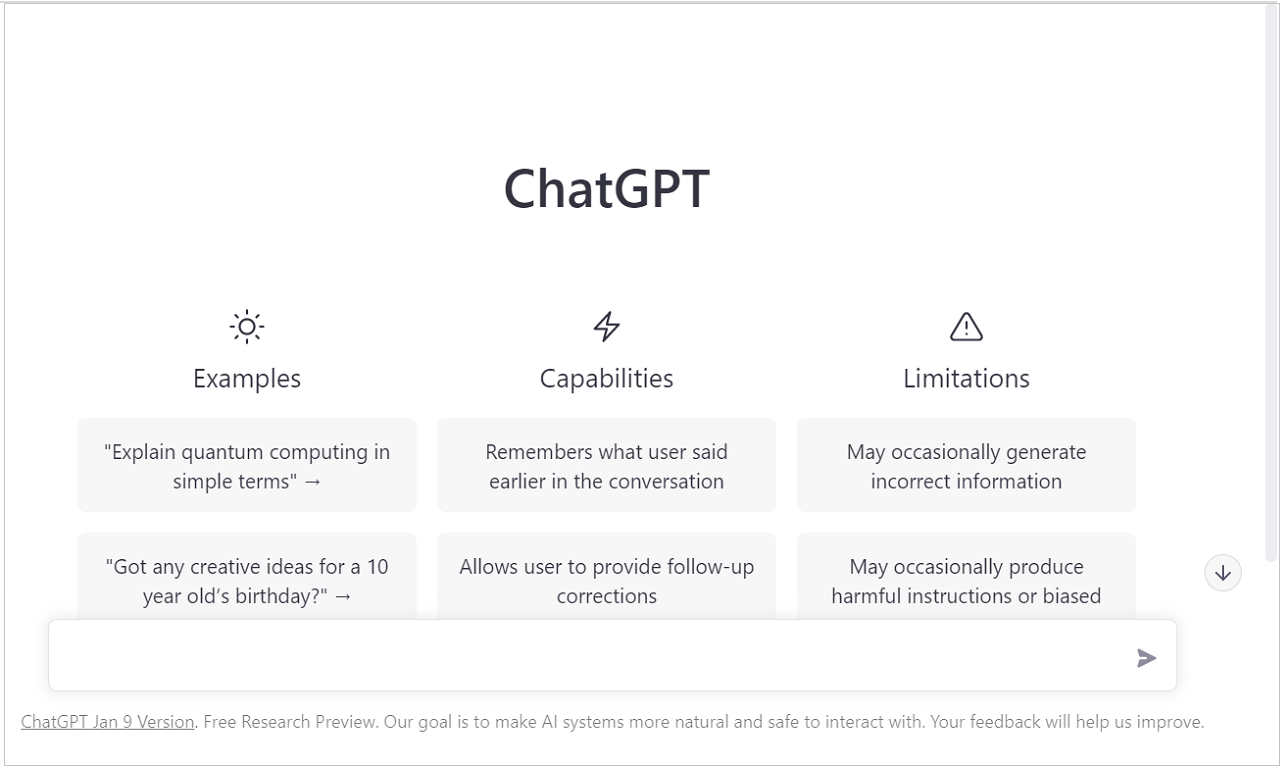

The reason is the public beta release of the latest version of OpenAI’s conversational AI chatbot, ChatGPT. Now anyone with an Internet connection can play with AI, discover its potential, explore its boundaries and ask it about the future.

So, just for fun, I asked ChatGPT to write this week’s article. Will it take my job? You be the judge!

I gave ChatGPT the following instruction:

This is the first answer that the chatbot returned. It is unedited. I’ve fact-checked it and put my comments under the article.

The Gulf Cooperation Council (GCC) states, which include Bahrain, Kuwait, Oman, Qatar, Saudi Arabia, and the United Arab Emirates (UAE), have been actively investing in and adopting artificial intelligence (AI) and other emerging technologies in recent years. These efforts are driven by a desire to diversify their economies, improve efficiency and productivity, and enhance the overall competitiveness of their countries.(1)

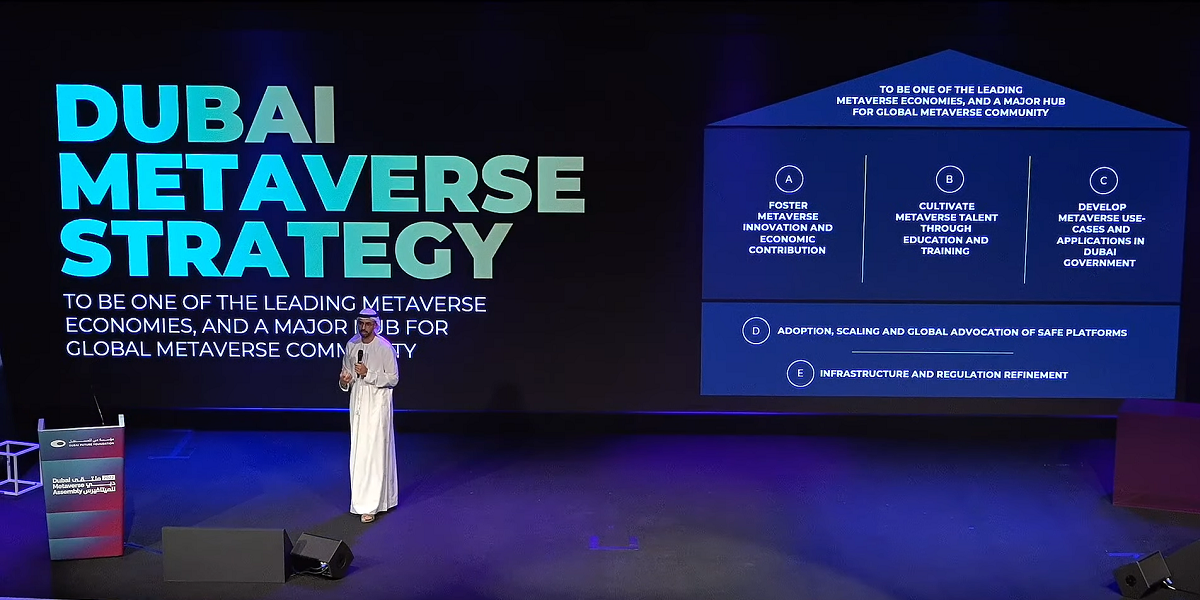

One key trend in the adoption of AI in the GCC states is the increasing focus on the development of smart cities. For example, the UAE has launched several initiatives aimed at creating smart cities, such as the Dubai Smart City project and the Abu Dhabi Smart City project. These initiatives aim to use technology to improve the quality of life for citizens, enhance urban planning, and create new business opportunities.(2)

Another trend is the use of AI in the healthcare sector. For example, in Qatar, the government has launched the Qatar Genome Project, which aims to use genomics and AI to improve the diagnosis and treatment of diseases. Additionally, Saudi Arabia has launched the Misk Medical AI Program, which aims to use AI to improve the efficiency and effectiveness of healthcare in the country.(3)

Another key trend is the use of AI and other emerging technologies in the financial sector. For example, the Central Bank of Bahrain has launched the Bahrain FinTech Bay, which aims to promote the development of fintech and other innovative technologies in the country. Additionally, the Dubai International Financial Centre (DIFC) has launched the Dubai Blockchain Strategy, which aims to use blockchain technology to improve the efficiency and security of financial transactions in the UAE.(4)

In addition to these specific examples, GCC countries have also been investing in other areas such as energy, transportation, and retail. For instance, in Oman, the government has been working with companies like Siemens to implement AI-enabled systems in the oil and gas sector. In Saudi Arabia, the government has been working to develop autonomous vehicles and drones for transportation and delivery purposes.(5)

Overall, the adoption of AI and other emerging technologies in the GCC states is being driven by the desire to improve efficiency and productivity, diversify their economies, and enhance the overall competitiveness of their countries. These efforts are also supported by significant investments in research and development, as well as the development of supportive ecosystems, such as innovation hubs and accelerators. As a result, we can expect to see continued growth in the adoption of AI and other emerging technologies in the GCC states in the coming years.(6)

It is worth noting that the GCC countries have a lot of potential to be the leader in AI, but there is also a lot of work that needs to be done to overcome challenges such as lack of data privacy laws and shortage of local talent. It is important for the GCC governments to work on these challenges and to also focus on building an ecosystem that supports innovation, investment and collaboration in AI.(7)

Fact check & analysis

- Paragraph one is correct and provides a useful introduction.

- Paragraph two provides a useful example and is essentially correct, but contains a little misinformation. The Dubai Smart City project was announced in 2014, but has since developed into several initiatives with different names, such as Smart Dubai, which is now Digital Dubai. I don’t remember there ever being an “Abu Dhabi Smart City” project by name.

- Paragraph three is also useful, although the language is repetitive. However, again, ChatGPT inserts its own names. The Qatar Genome Project is the name of an actual project. As far as I know, “Misk Medical AI Program” is not, although Saudi Arabia’s Misk Foundation does have medical programs.

- Paragraph four contains misinformation. Again, the topic here is useful in the context of the article, but the Dubai Blockchain Strategy was not launched by DIFC; it was announced by Smart Dubai and Dubai Future Foundation in 2017.

- These paragraphs are now following a formula, but even so, paragraph five is a useful contribution to the report. That said, the claim that Saudi Arabia is “working to develop autonomous vehicles” is overstated and possibly misleading.

- Paragraph six is correct, although I would have preferred it to mention some examples to make it more specific.

- Paragraph seven is correct and useful.

So, should I give up, or stick around for a while longer?

This story was originally published on LinkedIn.