It’s becoming a new communications quandary: when do you tell your audience that you’ve used AI to create something?

When do you proudly announce that your new creation was produced using the latest AI technologies? When do you need a disclaimer? And is it ethical to keep quiet about it altogether? These are questions that I’ve given quite a lot of thought to over the past couple of years.

Two years after the launch of OpenAI’s ChatGPT, it’s not hard to see that very soon everyone will be using Generative AI tools to help with everyday communications and writing, and to produce creative work.

However, I believe that we are still at the messy stage of GenAI!

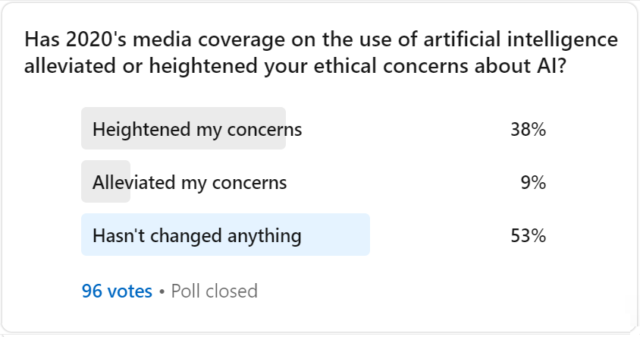

The quality of GenAI-generated content still varies greatly, depending on the technology platform, the skills of the end user and the type of job at hand. This means that we will continue to see content at widely varying levels of quality and effectiveness, and that most of us will be able to identify a high percentage of AI content when we see it. Spotting AI content is becoming a sort of superpower! Once you begin noticing AI content, you just can’t stop seeing it. So, in this environment, deciding when to be proud of your AI content and tell everyone what you’ve done, and when to keep quiet, can be a judgement call.

There are also, of course, ethical dilemmas that accompany AI content, including how to decide whether AI has had a positive impact (e.g. added value) or a negative one (e.g. done someone out of a job). Then there are copyright, the fair use of data, and the potential for AI plagiarism.

Timing

As with most things concerning communications, what you say and don’t say has a lot to do with timing. Firstly, many of the issues that we wrestle with today could be a thing of the past in five years’ time. Take, for example, the negative connotations of your multi-million-dollar business cancelling your photography agency’s contract because you’re going to save money by creating all your catalogue shots using AI. This is very much a present-day issue. In ten years’ time, whichever photographers remain in business will have adjusted to the new reality, and no one will bat an eyelid if you never hire an agency of any kind ever again.

Secondly, as with any other communications requirement, a little forethought and planning should let you work out which messages and policies to put in place now for talking about AI in today’s environment. You can then map out how these might change over the next year or two as perceptions and reputational risks shift. Just because AI has some unknowns, it doesn’t mean that it can’t be planned for.

A little empathy goes a long way

The biggest risk, as usual, is failing to take into account the perceptions of employees, customers and other stakeholders, both in your use of AI and in your communications about it. Part of the problem here is that many organisations these days have a team of people who are well-versed in AI, but this team often does not include the communications and marketing people!

So, does one announce “AI campaigns”? For me, it’s all about whether this helps meet the goals, resonates with the target audience and doesn’t risk upsetting other audiences. Whilst all your marketing counterparts may be jolly impressed that you created your latest campaign in one day and made it home in time for tea, your customers are likely to care more about your message and what that campaign means to them. It’s easy to let the ‘humble AI brag’ creep into communications because we all want to be seen moving with the times, but unless there’s a clear benefit for your key audiences, it really doesn’t belong there.

Transparency and authenticity

As with many corporate reputation risks, reviewing how and where to offer more transparency about AI usage can help mitigate some of that risk. For example, making it clear that your website’s chat support is handled by an AI chatbot rather than a human can help stop customers from making false assumptions (and perhaps from being unnecessarily annoyed or upset).

What about marketing content? Should you be transparent about which content was created using AI? My experience is that the more personal the communication, the more sensitivity there is. I may not care if your $100,000 billboard was created entirely by AI, but when I receive a personal email from you, I probably expect more authenticity.

A personal perspective

Last year, I began labelling my LinkedIn content to show where and how I used AI. The use of ChatGPT and other Generative AI tools to write posts, articles and comments has started to proliferate on LinkedIn. As you have probably seen yourself, sometimes people use GenAI to great effect, and sometimes the content lacks the context, nuance and human touch that make it engaging. So, I’ve found that posting in this environment can invite scrutiny, and occasionally accusations about whether or not you are using AI to post.

I use AI extensively when planning, creating and repurposing content, but I still create more of my content with little or no help from AI than with it. Although AI-generated content rarely accounts for more than 50% of any written work, I don’t want my audience to assume either that I’m using AI to generate everything or that I don’t use AI at all. Additionally, I would much rather that the focus remains on what my content communicates than on what role AI played. So, I now add a footnote at the end of all my LinkedIn posts and articles, stating whether I’ve used AI and what I’ve used it for.

If you are guided by your goals, your audience, the context and the potential risks, then deciding on how and when to communicate your use of AI can be very straightforward.

This article first appeared in my monthly AI First newsletter.

Image credit: Drazen Zigic via Freepik.